Linear Regression

Here I discuss how violation of linear regression assumptions affect model explainability and foresting performance. It is a practical guide on the caveats of the Linear Model and how to deal with them - particularly useful in forecasting-focused settings like Kaggle. It is assumed the reader is familiar with the mathematics behind the model.

Linear model's assumptions are:

- The target variable is linear in the predictor variables. Violation is also known for mispecified model

- The errors are normally distributed, independent, and homoscedastic (constant variance).

- The errors are independent of the features (no endogeneity).

- The features are not multi-collinear (not highly correlated).

Example when each of these assumptions is violated:

- Non-linear relationship:

- Correlated errors: time series data with autocorrelated residuals

- Heteroscedasticity: variance of errors increases with the value of x (leverage effect, further from mean pull the line more)

- Endogeneity: omitted variable that affects that affects both x and y

Multi-Collinear Features

- Multicolinearity affects model interpretability and not so much forecasting performance.

Experiment:

- Run LR

y ~ x1 - Run LR

y ~ x1+x2, where these two are highly correlated

Results

Uncorrelated features correlation:

Uncorrelated case model summary:

OLS Regression Results

==============================================================================

Dep. Variable: y R-squared: 0.929

Model: OLS Adj. R-squared: 0.928

Method: Least Squares F-statistic: 1275.

Date: Tue, 08 Jul 2025 Prob (F-statistic): 5.50e-58

Time: 08:19:04 Log-Likelihood: -78.314

No. Observations: 100 AIC: 160.6

Df Residuals: 98 BIC: 165.8

Df Model: 1

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const 5.0443 0.054 93.689 0.000 4.937 5.151

X1 2.1139 0.059 35.712 0.000 1.996 2.231

==============================================================================

Omnibus: 3.154 Durbin-Watson: 2.216

Prob(Omnibus): 0.207 Jarque-Bera (JB): 3.133

Skew: 0.105 Prob(JB): 0.209

Kurtosis: 3.841 Cond. No. 1.16

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

Highly correlated features correlation:

X1 X2

X1 1.000000 0.999931

X2 0.999931 1.000000

Highly correlated case model summary:

OLS Regression Results

==============================================================================

Dep. Variable: y R-squared: 0.939

Model: OLS Adj. R-squared: 0.938

Method: Least Squares F-statistic: 751.5

Date: Tue, 08 Jul 2025 Prob (F-statistic): 9.10e-60

Time: 08:19:04 Log-Likelihood: -63.278

No. Observations: 100 AIC: 132.6

Df Residuals: 97 BIC: 140.4

Df Model: 2

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const 4.9343 0.047 105.551 0.000 4.842 5.027

X1 6.8089 4.486 1.518 0.132 -2.095 15.713

X2 -4.7588 4.475 -1.064 0.290 -13.639 4.122

==============================================================================

Omnibus: 0.401 Durbin-Watson: 2.118

Prob(Omnibus): 0.818 Jarque-Bera (JB): 0.103

Skew: -0.034 Prob(JB): 0.950

Kurtosis: 3.141 Cond. No. 174.

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

Insights:

- Forecasts of both model are good and in practice you don't need to drop the correlated features if you care about forecasts

- Remember that the forecast is the orthogonal projection of the real value y on the subspace spanned by the design matrix . If the design matrix has correlated features there are multiple ways to express the same . Hence different values for the would give the same forecast.

- When there are correlated features you will see large variance in the beta estimates, also mathematically will just be very large when the inside matrix is not positive definite.

- large beta variance, means low t-statistic, which means high p-value

- hence with correlated features the model is not interpretable but the sum is good forecasts.

The Geometry

- When two variables are highly correlated, they both almost point in the same “direction” in the predictor space.

- The model tries to allocate credit (and adjust slope) between them for explaining changes in y.

- Many combinations of coefficients can fit the same plane equally well.

Effect on Model Fit

- The overall plane/surface that regression fits (ŷ = intercept + b1X1 + b2X2) can still be the same, though b1 and b2 may be weird or unintuitive (like 378 and -377). Forecasts are still good.

- The regression is “sure” about the sum effect, but not about the individual effects.

Unstaple coefficients

- Opposite signs and very large in absolute values to offset each other.

- For forecasting, this generally does not hurt test-set predictive performance as long as your train/test data comes from the same distribution and the correlation patterns are stable. It can, however, make your model more sensitive to changes in the feature distribution ("unstable predictions" with changing data).

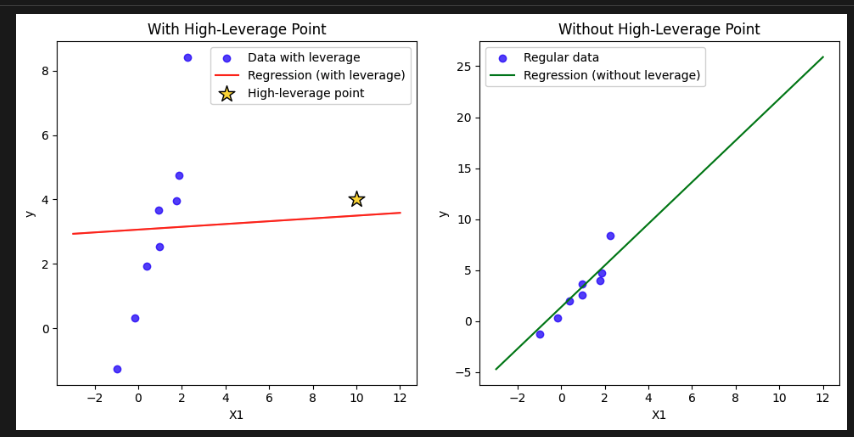

High Leverage Points

- identify outliers in the design matrix (not in target variable )

- Property of: Only the feature/design matrix X

- Definition: Measures how far an observation's x-values are from the mean of all x-values, i.e., how "unusual" its X-row is.

- Mathematically: The diagonal elements of the “hat” matrix .

- Note: Leverage is completely independent of .

- outliers in the design matrix can lead to the picture below:

- if a row deviates from the mean of it will have large leverage and pull the regression line

NOTE: In regression, leverage is a property of observations (rows, i.e. data points), not of features (columns, variables).

- You need to check rows that are with high leverage not features/columns!

# Model Spec

# Generate 8 normal data points

X1 = np.random.normal(0, 1, 8)

X2 = np.random.normal(0, 1, 8)

y = 2*X1 + 3*X2 + np.random.normal(0, 0.5, 8)

# Add a high-leverage point (extreme X1)

X1_leverage = np.append(X1, 10)

X2_leverage = np.append(X2, 1)

y_leverage = np.append(y, 4)

Results

Model with leveraged point

OLS Regression Results

==============================================================================

Dep. Variable: y R-squared: 0.526

Model: OLS Adj. R-squared: 0.368

Method: Least Squares F-statistic: 3.328

Date: Tue, 08 Jul 2025 Prob (F-statistic): 0.107

Time: 09:31:52 Log-Likelihood: -18.040

No. Observations: 9 AIC: 42.08

Df Residuals: 6 BIC: 42.67

Df Model: 2

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const 1.0550 1.093 0.965 0.372 -1.620 3.730

X1 0.0434 0.282 0.154 0.883 -0.647 0.734

X2 3.9627 1.857 2.134 0.077 -0.580 8.505

==============================================================================

Omnibus: 0.114 Durbin-Watson: 1.807

Prob(Omnibus): 0.944 Jarque-Bera (JB): 0.321

Skew: 0.113 Prob(JB): 0.852

Kurtosis: 2.103 Cond. No. 9.95

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

Model without leveraged point

OLS Regression Results

==============================================================================

Dep. Variable: y R-squared: 0.972

Model: OLS Adj. R-squared: 0.961

Method: Least Squares F-statistic: 88.04

Date: Tue, 08 Jul 2025 Prob (F-statistic): 0.000127

Time: 09:31:52 Log-Likelihood: -5.0804

No. Observations: 8 AIC: 16.16

Df Residuals: 5 BIC: 16.40

Df Model: 2

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const 0.2898 0.299 0.968 0.377 -0.480 1.059

X1 2.0443 0.233 8.772 0.000 1.445 2.643

X2 2.1176 0.528 4.008 0.010 0.759 3.476

==============================================================================

Omnibus: 3.584 Durbin-Watson: 2.783

Prob(Omnibus): 0.167 Jarque-Bera (JB): 1.223

Skew: -0.958 Prob(JB): 0.543

Kurtosis: 3.002 Cond. No. 4.46

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

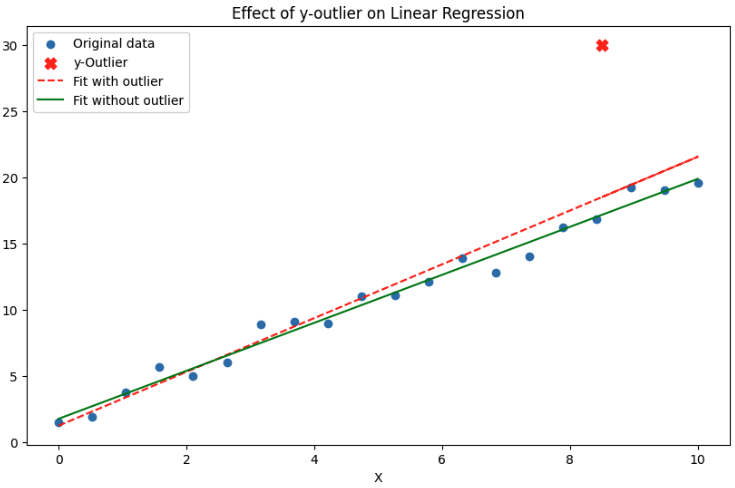

Residuals and Cook's Distance

- identify outliers in target variable

- Studentised residuals and cook's distance use leverage and residual error to find outliers in

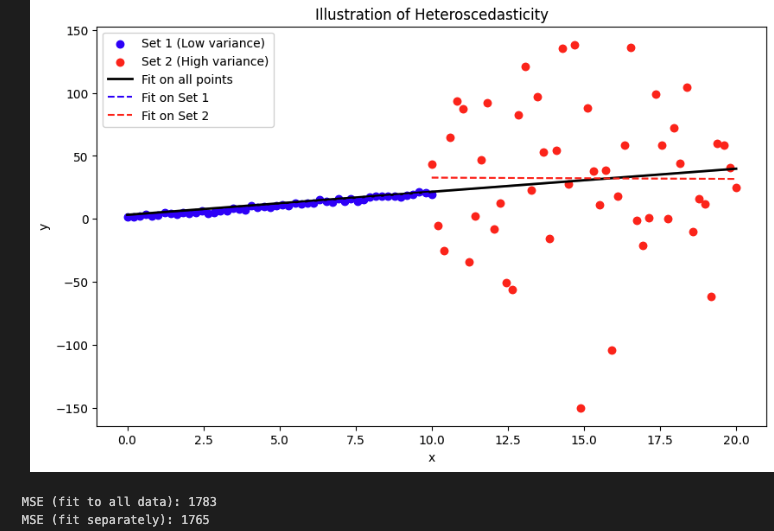

Homo or Hetero

- Homoscedacity is the assumption that residuals are with equal variance.

CODE

import numpy as np

import matplotlib.pyplot as plt

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error

def generate_data(seed=42, n1=50, n2=50):

np.random.seed(seed)

x1 = np.linspace(0, 10, n1)

y1 = 2 * x1 + 1 + np.random.normal(0, 1, n1)

x2 = np.linspace(10, 20, n2)

y2 = 2 * x2 + 1 + np.random.normal(0, 69, n2) # High variance, Different mean

return x1, y1, x2, y2

def fit_single_model(x, y):

model = LinearRegression().fit(x.reshape(-1, 1), y)

y_pred = model.predict(x.reshape(-1, 1))

return model, y_pred

def fit_two_models(x1, y1, x2, y2):

model1 = LinearRegression().fit(x1.reshape(-1, 1), y1)

y1_pred = model1.predict(x1.reshape(-1, 1))

model2 = LinearRegression().fit(x2.reshape(-1, 1), y2)

y2_pred = model2.predict(x2.reshape(-1, 1))

return model1, y1_pred, model2, y2_pred

def plot_results(x1, y1, x2, y2, X_all, y_pred_all, y1_pred, y2_pred):

plt.figure(figsize=(10, 6))

plt.scatter(x1, y1, label='Set 1 (Low variance)', color='blue')

plt.scatter(x2, y2, label='Set 2 (High variance)', color='red')

plt.plot(X_all, y_pred_all, label='Fit on all points', color='black', linewidth=2)

plt.plot(x1, y1_pred, label='Fit on Set 1', color='blue', linestyle='--')

plt.plot(x2, y2_pred, label='Fit on Set 2', color='red', linestyle='--')

plt.legend()

plt.xlabel('x')

plt.ylabel('y')

plt.title('Illustration of Heteroscedasticity')

plt.show()

def calculate_mse(y_true, y_pred):

return mean_squared_error(y_true, y_pred)

def main():

# Generate data

x1, y1, x2, y2 = generate_data()

X_all = np.concatenate([x1, x2]).reshape(-1, 1)

y_all = np.concatenate([y1, y2])

# Fit models

model_all, y_pred_all = fit_single_model(np.concatenate([x1, x2]), y_all)

model1, y1_pred, model2, y2_pred = fit_two_models(x1, y1, x2, y2)

# Plot

plot_results(x1, y1, x2, y2, np.concatenate([x1, x2]), y_pred_all, y1_pred, y2_pred)

# MSE calculations

mse_all = calculate_mse(y_all, y_pred_all)

y_sep_pred = np.concatenate([y1_pred, y2_pred])

mse_sep = calculate_mse(y_all, y_sep_pred)

print("MSE (fit to all data):", round(mse_all))

print("MSE (fit separately):", round(mse_sep))

main()

It reduces a bit the forecasting accuracy.

Summary of the results:

=== Regression summary: All Data ===

OLS Regression Results

==============================================================================

Dep. Variable: y R-squared: 0.059

Model: OLS Adj. R-squared: 0.050

Method: Least Squares F-statistic: 6.194

Date: Thu, 10 Jul 2025 Prob (F-statistic): 0.0145

Time: 08:37:53 Log-Likelihood: -516.21

No. Observations: 100 AIC: 1036.

Df Residuals: 98 BIC: 1042.

Df Model: 1

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const 3.2053 8.499 0.377 0.707 -13.661 20.072

x1 1.8295 0.735 2.489 0.015 0.371 3.288

==============================================================================

Omnibus: 21.818 Durbin-Watson: 2.191

Prob(Omnibus): 0.000 Jarque-Bera (JB): 73.588

Skew: -0.605 Prob(JB): 1.05e-16

Kurtosis: 7.025 Cond. No. 23.2

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

=== Regression summary: Set 1 (Low variance) ===

OLS Regression Results

==============================================================================

Dep. Variable: y R-squared: 0.975

Model: OLS Adj. R-squared: 0.975

Method: Least Squares F-statistic: 1903.

Date: Thu, 10 Jul 2025 Prob (F-statistic): 2.81e-40

Time: 08:37:53 Log-Likelihood: -66.142

No. Observations: 50 AIC: 136.3

Df Residuals: 48 BIC: 140.1

Df Model: 1

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const 1.0644 0.258 4.120 0.000 0.545 1.584

x1 1.9420 0.045 43.622 0.000 1.853 2.032

==============================================================================

Omnibus: 0.453 Durbin-Watson: 1.942

Prob(Omnibus): 0.798 Jarque-Bera (JB): 0.608

Skew: 0.156 Prob(JB): 0.738

Kurtosis: 2.559 Cond. No. 11.7

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

=== Regression summary: Set 2 (High variance) ===

OLS Regression Results

==============================================================================

Dep. Variable: y R-squared: 0.000

Model: OLS Adj. R-squared: -0.021

Method: Least Squares F-statistic: 0.001247

Date: Thu, 10 Jul 2025 Prob (F-statistic): 0.972

Time: 08:37:53 Log-Likelihood: -275.16

No. Observations: 50 AIC: 554.3

Df Residuals: 48 BIC: 558.1

Df Model: 1

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const 33.7690 44.502 0.759 0.452 -55.707 123.245

x1 -0.1028 2.911 -0.035 0.972 -5.956 5.751

==============================================================================

Omnibus: 4.219 Durbin-Watson: 2.211

Prob(Omnibus): 0.121 Jarque-Bera (JB): 3.105

Skew: -0.535 Prob(JB): 0.212

Kurtosis: 3.587 Cond. No. 79.7

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

These results show how when you fit one for all it has low mainly due to the large errors by high variance data. You will miss the signal in the low variance data (p-value for x1 is higher in the fit all model).

Loss Function, Likelihood, Pearson Correlation

- Under normality and homoscedasticity assumptions, the OLS loss function is equivalent to the negative log-likelihood of the Gaussian distribution.

- Also it is equivalent to the Pearson correlation coefficient between the predicted and actual values.

Independent Errors Assumption

- The assumption that the errors are independent of each other.

- statsmodels has a fix for p-values and varaince estimae (still fits normal OLS) but gives different summary results.

X_const = sm.add_constant(x)

ols_model = sm.OLS(y_ar1, X_const).fit()

robust_model = ols_model.get_robustcov_results(cov_type='HAC', maxlags=1)

Computation and Optimization

Solving Ax = b

Direct Inverse

-

Strict inverse using QR decomposition, Q is orthogonal matrix, R is upper triangular. You can solve using back substitution. Recursively solving for each element of x starting from the last row up to the first row.

-

is run time (matrix inversion is )

-

Use Gram-Schmidt to build QR decomposition

-

run time

-

Gram-Schmidt is doing regression on each feature one by one, orthogonally projecting the residuals onto the next feature.

-

Your book "Elements of Statistical Learning" has the exact algo.

-

In theory you can use Gaussian Elimination to calculate the inverse of A. This is when you "attach" the identity matrix to A and do row operations to convert A to identity matrix, the identity matrix will become A inverse. These operations are echelon form and are run time.

Pseudo Inverse

- Produces more stable results and is strictly better than direct inverse in terms of forecasts

- The big-O run time could be larger for well-defined invertible matrices, but in practice it is faster.

- pseudoinverse instead of blowing up values close to 0 it suppresses them naturally giving stable results

- SVD* decomposition is used to compute the pseudoinverse

A = U @ SIGMA @ V^T -> A^+ = V @ SIGMA^+ @ U^T- U and V are orthonormal matrices

Decomposition Methods

- LU decomposition: where L is lower triangular and U is upper triangular. Used to solve Ax=b by solving Ly=b and then Ux=y (simpler than taking the inverse directly)

- Cholesky decomposition (subcase of LU decomposition): for symmetric positive definite matrices.

- QR decomposition: where Q is orthogonal and R is upper triangular. Used to solve Ax=b by solving Rx=Q^Tb.

- SVD decomposition: where U and V are orthogonal and Σ is diagonal. Used for pseudoinverse and dimensionality reduction.

#TODO check how to implement Gram-Schmidt in numpy/scipy.

I tested in practice, the Gram-Schmidt was slower, though.

CODE

iimport numpy as np

import time

from numpy.linalg import inv, solve

from scipy.linalg import qr

# Function for normal equation (direct inversion)

def normal_equation(X, y):

XtX = X.T @ X

Xty = X.T @ y

beta = inv(XtX) @ Xty

return beta

# Function for QR decomposition

def qr_solution(X, y):

Q, R = qr(X, mode='economic')

beta = solve(R, Q.T @ y)

return beta

# Simulation parameters

n = 50000 # number of samples (can increase for stress testing)

p = 200 # number of features

np.random.seed(0)

X = np.random.randn(n, p)

y = np.random.randn(n)

# Run and time the normal equation

start = time.time()

beta_normal = normal_equation(X, y)

time_normal = time.time() - start

print(f"Normal Equation Time: {time_normal:.4f} seconds")

# Run and time the QR decomposition

start = time.time()

beta_qr = qr_solution(X, y)

time_qr = time.time() - start

print(f"QR Decomposition Time: {time_qr:.4f} seconds")

# Check the difference in solution (should be very small!)

diff = np.linalg.norm(beta_normal - beta_qr) # sum(abs(diff))^(0.5)

print(f"L2 norm of difference between solutions: {diff:.2e}")

# Output

'''

Normal Equation Time: 0.0389 seconds

QR Decomposition Time: 0.5719 seconds

L2 norm of difference between solutions: 5.85e-16

'''

Feature Selection

- Forward or backward selection methods are O(n^2)

- Can use regularization for feature selection

- In practice if you have many features that are noisy or collinear, regularization will help with forecasting performance.

- however if you choose the top correlated with the target features that could outperform fitting regularized LR on all features, since you'd try to fit noise. Lasso might remove the noisy features but it still struggles.

Regularization

Regularization can be used in two ways:

- to reduce the variance of the model

- to perform feature selection

Feature selection and regularization can be connected

- They can be seen as L0 regularization (penalizing the number of non-zero coefficients)

- L1 and L2 regularization are convex problems. The loss with regularization is a SUM OF CONVEX functions!

- Minimizing the loss functions is equivalent to solving a constrained optimization problem = minimizing OLS + L1/L2 constraint on the coefficients.

- when you think of the constrained problems you can draw the OLS loss and the L1/L2 constraints and see where they intersect.

- L2 constraint is a circle, L1 is a diamond. The corners of the diamond are more likely to intersect with the OLS loss contours leading to sparse solutions. In multiple dimensions the L1 constraint is a hypercube with many corners.

Solutions:

If X is orthonormal, then the solutions to lasso and ridge are:

- lasso: sign(beta) * max(|beta|-lambda,0) where beta is the solution to OLS

- ridge: beta / (1+lambda)

Ridge

- Ridge (L2) regularization is a scaling regularization (divides the OLS solution by a factor (1+lambda))

- Ridge regularization is PCA regression with soft-threshold. It shrinks the coefficients of the principal components. It shrinks more the coefficients with low variance (less important components)

- Ridge is equivalent to maximizing the posterior mean with prior beta ~ Normal(0,rho). then lambda = sigma/rho (sigma is the error variance)

Lasso

- Lasso (L1) regularization

- sparse solutions (think of the constraint problem is a diamond)

- convex problem - no closed form solution - use coordinate descent, stochastic descent or LARS

- Lasso is equivalent to maximizing the posterior mode with prior beta ~ Laplace(0,b). then lambda = sigma/b (sigma is the error variance)

Say you have MSE with constraint |beta| > 5, then this is NOT convex - you could be on the wrong side of the parabola.

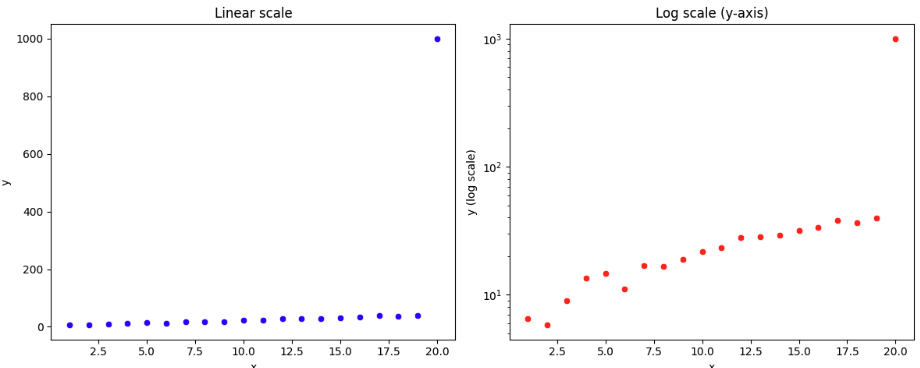

Log Scale

Make better visualizations when there is an outlier in the target variable.

Note how the y axis does not have equally distributed points. from 10^1 to 10^2 is the same length as from 10^2 to 10^3

CODE

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# Sample data: 20 points lying roughly on a line, plus one big outlier

np.random.seed(0)

x = np.arange(1, 21)

y = 2 * x + 1 + np.random.normal(scale=2, size=x.size)

y[-1] = 1000 # outlier

df = pd.DataFrame({'x': x, 'y': y})

fig, axs = plt.subplots(1, 2, figsize=(12, 5))

# Linear scale scatter plot

sns.scatterplot(x='x', y='y', data=df, ax=axs[0], color='b', marker='o')

axs[0].set_title("Linear scale")

axs[0].set_xlabel("x")

axs[0].set_ylabel("y")

# Log scale scatter plot

sns.scatterplot(x='x', y='y', data=df, ax=axs[1], color='r', marker='o')

axs[1].set_yscale('log')

# equivalent to:

# axs[1].scatter(df['x'], np.log(df['y']), color='r')

axs[1].set_title("Log scale (y-axis)")

axs[1].set_xlabel("x")

axs[1].set_ylabel("y (log scale)")

plt.tight_layout()

plt.show()

- When all points are close together (except for the outlier), the outlier dominates the linear y-range, which squeezes the other data against the axis.

- With log scale, both the regular points and the outlier are shown in proportion, so you can see ALL points—even values that differ by orders of magnitude.