Probability

Notes on Oxford lectures and solutions/answers to all problems from this book.

Buzzwords

Sample space, events, probability measure. Sampling with or without replacement. Conditional probability = partition of sample space, law of total probability/total expectation, Bayes’ Theorem. Independence.

variance, covariance, zero covariance does not imply independence, in normal distributions zero covariance = independence

Discrete radom variablers, pmf = probability mass function, Marginal and conditional distributions, first and second order linear difference equations (fibonacci), random walk, Gambler's ruin.

Poisson interpretation: The number of occurrences during a time interval of length is a Poisson-distributed random variable with expected value .

Po is approximation of Bin for large , derive from pmf of binomial.

Poisson limit therem, law of rare events, poisson approximation of binomial (less computationally expensive), exponential distribution is the poisson discrete equivalent

negative binomial is sum of geometric distributions, binomial is sum of bernoulli distributions

is sum of gamma distributions

sum of exponential distributions is

probability generating function, branching processes, solution to probability of extinction , random sum formula where , iid and independent from . use it to prove (alternatively use total law of expectation) . This solves problems with stopping times too.

use pgfs to compute , hint .

pgf helps to prove sum of independent distribution is another distribution use and that pgf defines uniquely the distribution

use pgf's to compute distribution. , eval derivatives at 0, need to be easility differentiable

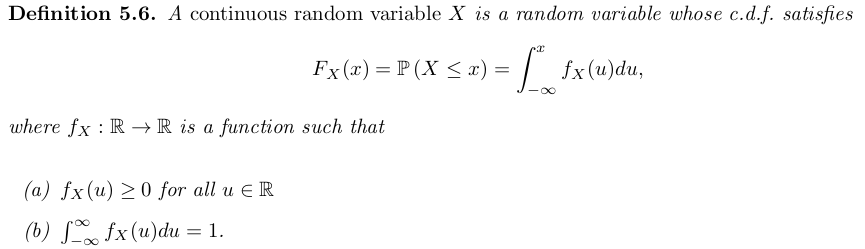

cts random variables, cdf, pdf

pdf has similar properties as pmf but is NOT a probability.

sample any cdf from uniform samples.

, need to be non-negative random variable. Prove that using swap integrands (Tonelli's theorem).

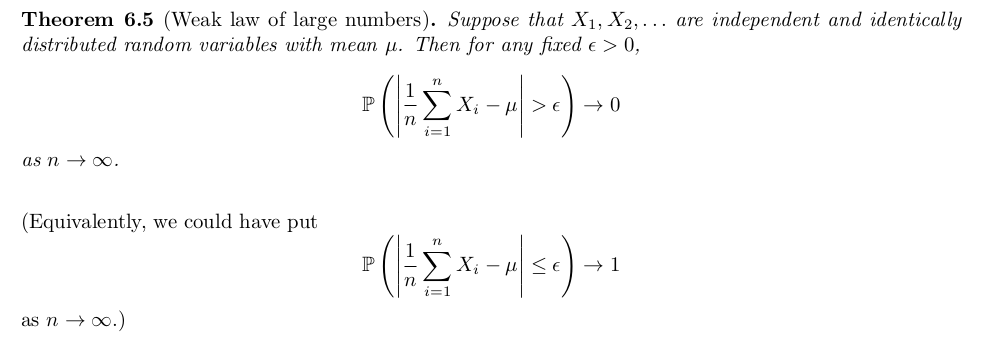

Random sample, sums of independent random variables. Markov’s inequality, Chebyshev’s inequality, Weak Law of Large Numbers.

mixture distributions good for bayesian modelling

- Eulers's formula (Riemann zeta function expressed with prime numbers) has cool probabilistic proof. Check sheet 2 last problem.

Chapter 1. Events and probability

Set of all possible outcomes is called the sample space. S subset of is called an event.

For events and we can do set operations:

-

means at least one of the two occurs

-

means both occur

-

means occurs but does not

We assign probabilities to events.

Counting. Number of permutations of distinct elements is

Binomial coefficient .

Binomial theorem expands . Proof by counting.

Bijectionargument in counting problems

Example How many distinct non-negative intger-valued solutions of the equation are there?

- use sticks argument

Lemma. Vandermonde’s identity

Use Breaking things down argument

Countable sets are those which you can label (i.e. map to the integer space), Uncountable sets cannot be labelled like

Definition 1.5. A probability space is a triple where

- is the sample space,

- is a collection of subsets of , called events, satisfying axioms F1 –F3 below,

- is a probability measure, which is a function satisfying axioms P1 –P3 below.

The axioms of probability

is a collection of subsets of Ω, with:

- F1 : ∅ ∈ . empty event is in

- F2 : If , then also . An event and its complementary are both in

- F3 : If {Ai , i ∈ I} is a finite or countably infinite collection of members of , then . has the notion of closure.

is a function from to , with:

- P1 : For all .

- P2 : . All events have probability 1.

- P3 : If {Ai , i ∈ I} is a finite or countably infinite collection of members of , and for , then $P(∪A_i ) = \sum P(A_i) $ Distributivity of union over intersection.

P3 would not be true if it was just for pairwise sets. The above is stronger!

Theorem 1.9. Suppose that is a probability space and that . Then

- ;

- If then .

Definition 1.11. Let be a probability space. If and then the conditional probability of given is

Probability space is a powerful thing. You have all the axioms above to be true!

Lemma If , then for any event , if you swap then is a probability space too! That is if you condition your probability space on certain event you still have all the axioms.

Independece Events and are independent if .

A family of events is independend if

PAIRWISE INDEPENDENT DOES NOT IMPLY INDEPENDENCE.

and independent imply and are independent.

Theorem 1.20 (The law of total probability). Suppose is a partition of by sets from , such that for all . Then for any ,

.

(partition theorem)

Bayes theorem = Conditional probability + law of total probability

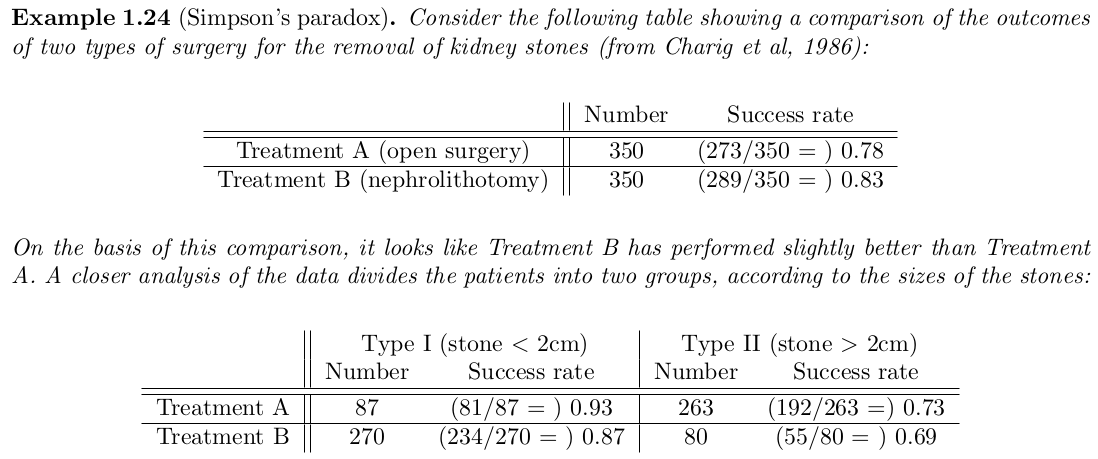

Simpson’s paradox

Problems

Solutions to 1.11 from the book.

Q1. Condition on first event and do linear differencing equation. Homogeneous and particular solution.

. Can use indeuction too.

Note this is a binomial distribution and we compute probability we have even outcome. Can expend

Q2. No. Finate spaces should be power of two.

Q3. By induction. Use union operation is associative

Q4. By Q3 and

Q5. Example of pairwise independence (3 events) that does not imply independence of all 3 events .

Q6. Conditional probability + Bayes. 79/140, 40/61

Q7. 3 spades sequences/all sequences

Q8. Binomial distribution and expand Stirling.

Q9. Contidional probability + Binomial disribution + Vandermonde’s identity

Q10. Skip physics

Q11. Law of total probability (parititon theorem). They want to get the eight element so can do manually with iteration. I don't see easy way to solve this difference equation by hand?

#TODO Q12. Extra hard, did not solve it. stack, normal numbers, Champernowne constant

Q13. Conditional probability + algebra iteration... Goal is to get differencing equation in each variable e.g.

Q14. a) Inclusion-exclusion principle. b)

Q15. Condition on k cards match. Then use incllusion exclusion principle.

Q16. Conditional probability on when 8:45 and 9:00 trains come.

Q17. #TODO

Q18 #TODO

Q19.

Chapter 2. Discrete random variables

Encode information about an outcome using numbers

Definition 2.1. A discrete random variable X on a probability space is a function such that

- (a) for each ,

- (b) is a finite or countable subset of .

(a) says lives in , that is it is an event and we can assign probability.

(b) is the definition of discrete.

Definition 2.3. A probability mass function (pmf) of a random variable is s.t.:

- for all ,

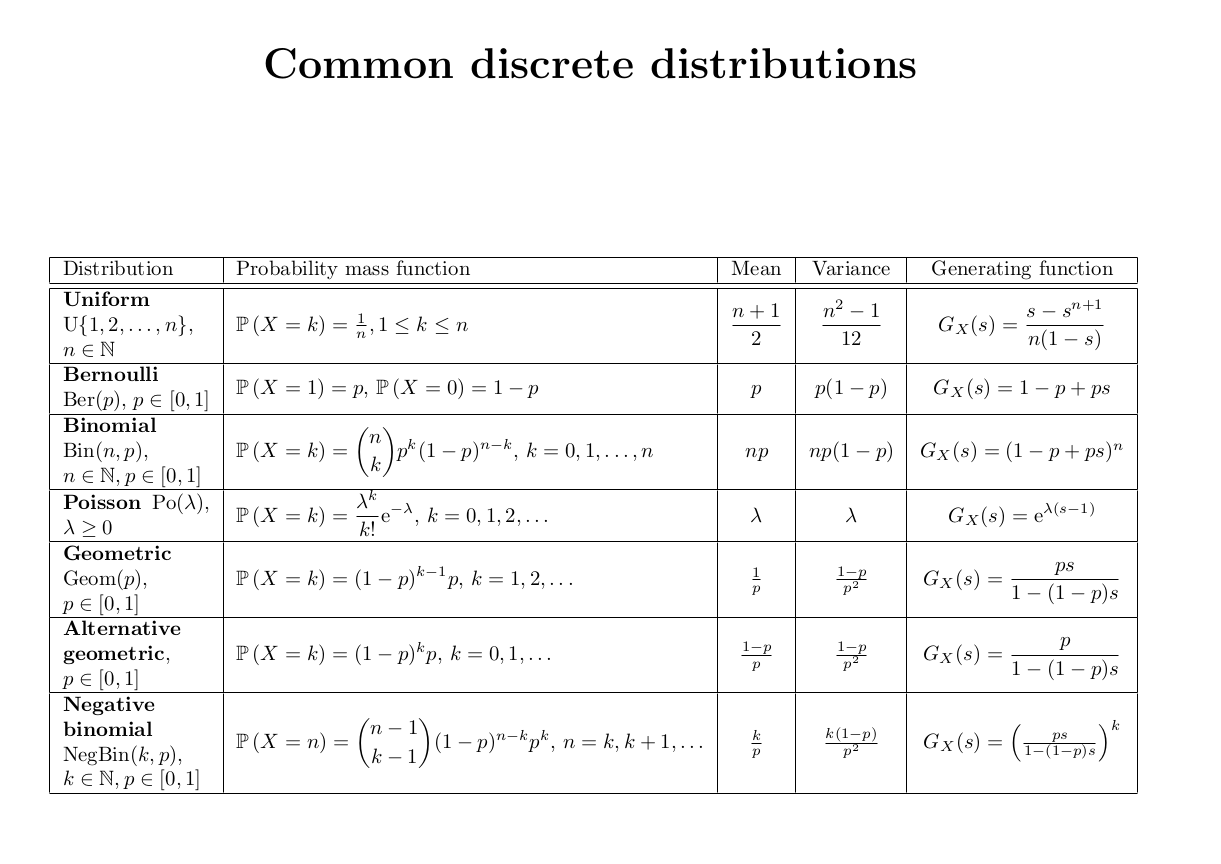

Classic discrete distributions:

- Bernoulli

- Binomial

- Geometric

- Poisson

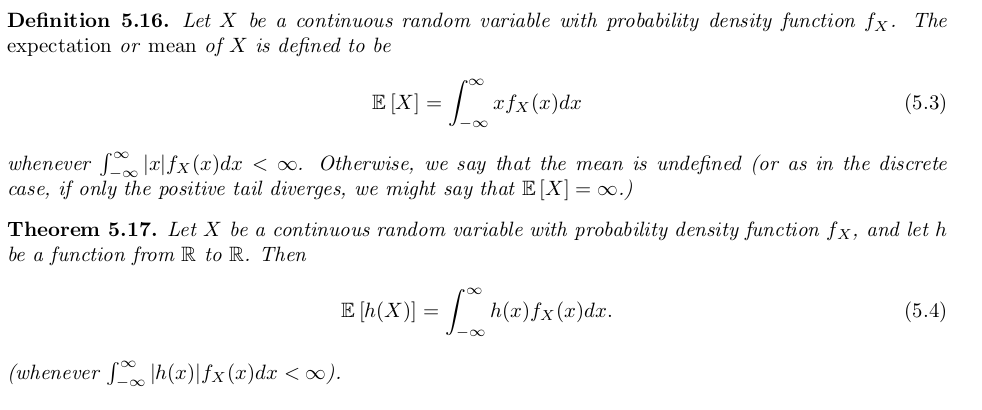

Definition 2.6 The expectation (or expected value or mean) of is

provided that , otherwise it does not exist. We require absolutely convergent series sum.

The expectation of is the ‘average’ value which takes.

Theorem.

Linearity of expectation:

Definition 2.11. For a discrete random variable , the variance of is defined by:

, provided that this quantity exists.

Variance gives size of fluctuations around the expectation.

Theorem 2.14 (Law of total expectation = Partition theorem for expectations).

Joint pmf is

Marginal distribution exists for joint distribution and is just integrating/summing out one of the variables

Theorem 2.23 If and are independent, then . Reverse is NOT true.

Definition .

i.i.d. = independent and identically distributed

Problems

Solution from chapter 2.6 in book.

Q1.

Q2.

Q3. Discrete RV with zero variance then, the RV is eqaul to the mean. In cts RV, almost surely (degenerate distribution).

Q4. need convergence, Rieman zeta function

Q5. Lack of memory proerty of Geometric distribution. Kvot bilo bilo.

Q6.

- Proof by visualization.

- coupun collection problem, linearity of exp.

Q7. harmonic series

Q8. #TODO

Q9. Expected tosses till see consecutive heads . Write it using Markov chain state technique and goal is to compute , with boundary condition To solve it you need to take difference of consecutive equations and do telescoping sum like idea.

Q10. #TODO

Chapter 3. Difeerence equations and random walks

Theorem 3.3 The general solution to a difference equation:

can be written as where:

- is a particular solution to the equation (resembles )

- solves the homogeneous equation

To solve the homogeneous equation when you have you need to get the auxilary equation - substitute in the homogenous equat and the roots of it gives you the general form of the solution to the homogeneous equation. For it looks like:

If , then try

To solve the particular equation try solutions similar to if it fails try the next most complicated thing.

Gamblers ruin:

, where if gambler has money. Boundary conditions: .

This is second order difference equation. If you remove the boundary you need to take limits .

is . To prove that formally you need to use that for an increasing set of events you have .

Gamblers ruin for expectation number of steps to hit absorbing stage.

, with bc

Problems

Solution to problems from Chapter 10.5

Q1. can be expressed using two expressions. Think about the two walks separately and combined as in one walk.

Q2. Gamblers ruin with different parameters.

Q3. Condition on first step and use gamblers ruin solution for .

Q4. Skipped, to do property need to take limit as and define

#TODO finish questions, might need to read chapter. It is more comprehensive than the lecture notes

Chapter 4 Probability generating functions

The probability generating function of a discrete random variable is a power series representation of its pmf. It is defined only for discrete random variables.

where such that the expectation exists.

Theorem 4.2. The distribution of is uniquely determined by its probability generating function, .

The th derivative of evaluated at zero gives .

Theorem 4.3 If and are independent, then

Use the above two theorems to prove that sum of independent Bernoulli random variables is a Binomial random variable.

Sum of iid Poisson rvs is Poisson rv with parameter = sum of parameters.

Calculate moments by taking derivative of the pgf and evaluate at

Theorem 4.8. Let be i.i.d. non-negative integer-valued random variables with p.g.f. . Let be another non-negative integer-valued random variable, independent of and with p.g.f. . Then the p.g.f. of is .

This chain trick appears in branching processes. Start with one individual which gives birth to many children. In generation each individual in the population gives children. The pgf of total number of individuals in generation satisfies Where is the pgf of random variable giving number of children for 1 individual. By induction you can express as nested n times.

Extintion probability.

is solution to probability of extinction!

, where we condition on the number of children of the first individual.

This equation always have solution at 1. However you cannot solve it for .

You need to go this way: using the fact about increasing sequences of events.

It turns out that the question of whether the branching process inevitably dies out is determined by the mean number of children of a single individual. Then we find that there is a positive probability of survival of the process for ever if and only if . (Theorem 4.15 proves it)

Problems

Problems from Chapter 4.5 from the book

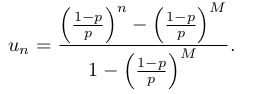

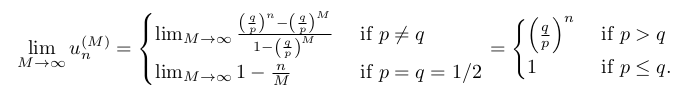

Q1. $P(X = k) = u_{k-1}-u_kS

Q2. , need to count number of ways you can sum 7 number and equal to 14 then subtract when any of the numbers is greater than 6 (could be 7 or 8 only, sum 6 numbers is minimum 6).

Q3. Geometric distribution with . Get pgf of geo + some arithmetic.

probability of winning are sum of geometric series. Mean durtion of game is 3.

Q4. Sum of alternative geometric distributions.

Q5.

- a) where and , differentiate pgf many times and evaluate at 0

- b)

Q6.

- a) ,

- b) , eval pgf at -1

- c) evaluate pgf at smart points trick

In this case the pgf evaluated at 3rd non trivial roots of unity is , the pgf is known at , so you can compute the probabilities.

Q7. Use . Then use bayesien theorem to prove the condinitonal probability is binomial distribution.

Q8. Skipped

Q9. Coupon-collecting problem, geometric distributions, pgf is known.

Q10. derivative of pgf evaluated at 1 is mean - directly from derivative of infinite sum series. for second prove need to use .

- two conditional probabilities from A and B points of view.

- distribution is something like geometric

- condition expectation. , similarly for . Answer

Q11.

- a) sum of independent Bernoulli.

- b) directly from pgf definition, pgf denies distribution uniquely

- c) last eq come from a).

Chapter 5. Continuous random variables

Recall that random variable is a function which maps the outcome space to . Discrete random variables have to be countable set.

Definition 5.2. The cumulative distribution function (cdf) of a random variable is the function defined by .

is non-decreasing.

CTS random variable:

WARNING: IS NOT A PROBABILITY.

For cts random variable for any

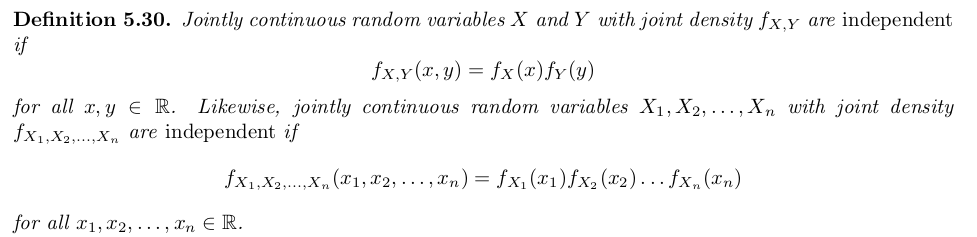

Independece in cts random variables is defined through the pdf:

But can be defined through cdf too.

NB Standard normal random variables and are independent if and only if their covariance is 0. This is a nice property of normal random variables which is not true for general random variables, as we have already observed in the discrete case.

Problems

Answers on problems from chapter 5.8 in the book.

Q1. (substitution + symmetry), - integration by parts + substitution.

Q2. for for Poisson we have , see

The number of occurrences before time λ is at least w if and only if the waiting time until the wth occurrence is less than λ.

Q3. Skipped

Q4. trick

, integrate by parts, use pdf integrates to 1

Q5. shows how to sample uniform distribution

Q6. sample any other distribution from uniform distribution

Q7. Integration question ,need to swap integrands (use Tonelli's theorem)trick

Q8. skipped, basic principles

Q9. use indicator function?

Q10. cdf is , take derivative to find pdf

Q11. integrate from zero to inf to get the constant,

Q12. need to define pdf of the angle theta and the distance from center needle to the strips. (can simulate from this problem.) wiki

Q13.

Q14. cdf is

Chapter 6. Random samples and the weak law of large numbers

Definition 6.1. Let denote i.i.d. random variables. Then these random variables are said to constitute a random sample of size from the distribution.

Let denote i.i.d. random variables with mean and variance . Then has and .

Theorem 6.6. Markov's inequality gives upper bound for a random variable to be larger than certain threshhold.

Theorem 6.7. Chebyshev's inequality tells you how far from the mean a random variable can deviate.

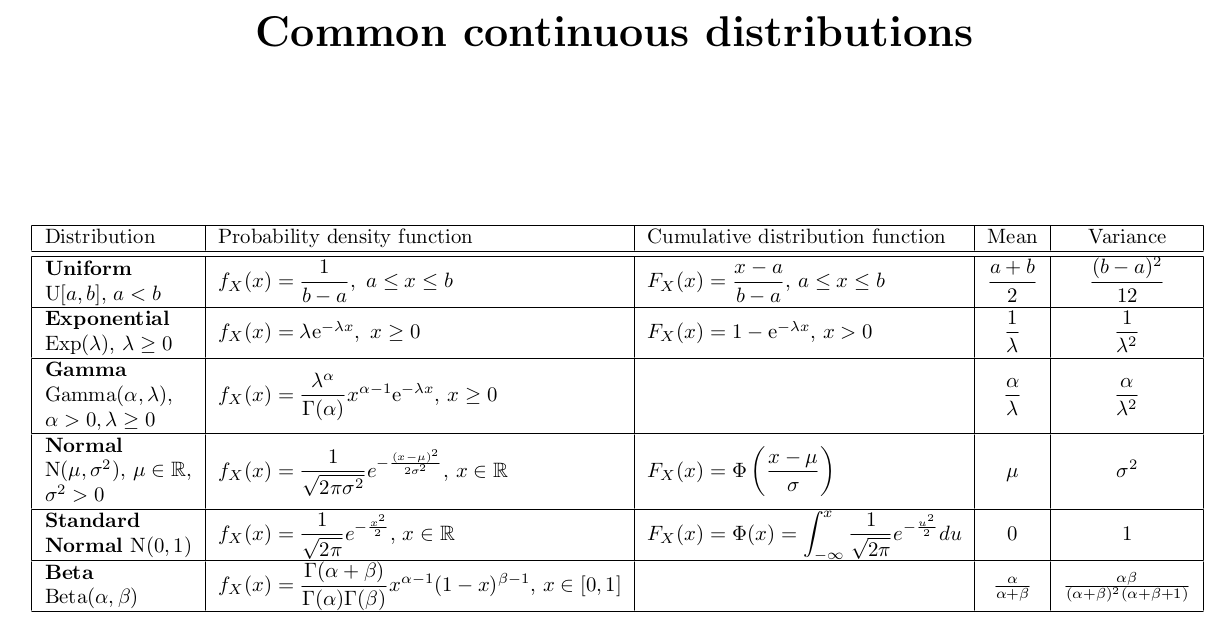

Distributions

Problem sheets answers

Sheet 1

Q1.

Q2.

Q3.

Q4. , then condition on largest element.

Q5. basic set theory holds for probability of events

Q6. a) . b)141

Q7. a) 23, b)

Q8. Inclusion exclusion principle

number of arrangements of no correct hook =

d)

Remark. probability no correct hooks converges to

Sheet 2 Q1. Example that pairwice independence does not imply independence

Q2.

Q3.

- a)

- b)

- c) Bayes from a) and b)

Q4 Use Bayes. Clarification: proportion are conservative, are liberal.

Q5.

-

a)

-

b)

Second is bigger. Intution is that in a) you reset every time, and in b) you are pulled towards balance.

Q6. Euler's formula for Riemann's zeta function.

- a)

- b) directly from definition , but for infinite number of events (pairwise dependence is not enough)

- c) if an integer is not divisibel by any prime number it muist be 1!

Sheet 3

Q1.

Q2. proof by visualization

Q3.

- a)

- b) memoryless propery of geometric distribution

Q4. Complete th exponent and use sum of pmf is 1.

Q5.

- a) for large binomial becomes poisson

- b)

Q6. 6

Q7. Coupon collector problem.

-

a) , geometric()

-

b) Geo()

-

c) harmonic series

Sheet 4

Q1.

Q2. zero covariance does not imply independence

Q3.

- a) independent so can just multiply

- b) sum of poisson independen is poisson (derive from pgf)

- c) binomial with

- use c

Q4.

- bayes, in numerator

- b) , use $pk = p{\ge k} - p_{\ge k+

Q5.

- , sum of binomial times poisson which turns out to be

Q6. from axioms?

Q7. first and second order difference equations. solvable using particular and homogeneous solutions. For particular solutions - strategy try next most complex thing.

Sheet 5

Q1. first order recurence equation.

Q2. 19

Q3.

- a)

- b)

- c) ,

Q4.

- a) sum of geometric series

- b) Take derivatives and evaluate at 1 .

Q5.

- a) condition on first two throws, second order recurence.

- b) last 3 tosses are fixed,

- c) take derivative of probability generating function

- d) hmmmm #TODO, maybe need two probabilities and use trick like in Q1?

Q6.

- a) , need to use from Q3.

- b) always need to go the whole way around, by symmetry the answer is

Sheet 6

Q1.

- a)

- b)

Q2.

- a) negative binomial - number of trials up to and including the -th success.

- b) negative binomial is sum of geometric distributions!

Q3.

- a) law of total expectation, or use Theorem 4.8 and play around with pgf derivatives evaluated at 1.

- b)

- c) no, is not longer , your proof in a) using law of total expecation uses the independence

Q4.

Q5. , ,

Q6.

- a) ,

- b)

- c) #TODO, maybe need to revisit notes, or look Miro's solution

Sheet 7

Q1. pictures of distributions

Q2. check if the integral of these functions exists

Q3.

- a) ,

- b)

Q4.

- a)

- b)

- c) Bayes theorem + a) memoryless property of exponential distribution

- d) take arcsin and use that is exponential

- e) exponential, with lower rate

- f) exponential distribution is the continuous version of the geometric distribution, capped exp is geometric. proof

Q5. substract mean, divide by std to get standard normal

- a) 0.454

- b) 0.921 - 0.454

- c)

Q6.

- dont need pdf to get expecation and variance see

- to get pdf just take derivate of cdf,

Q7.

- a) is uniform distribution, just go through definition

- b) - gi>ves a way to generate from uniform distribution

- c) thats exponential distribution, get the cdf, find its inverse and apply b)

Sheet 8

Q1. Integrate, choose constants which would make the integrals equal to 1. for independence check with marginal distributions

Q2

- a)

- b) treat using indicator variable

- c)

Q3. integrate from 0 to 1. Area under the shaded region..

Q4. chebyshev

Q5. chebyshev

Q6.

- a) ,

- b) 0 when , otherwise

- c) use b, ,

- d) ,

Q7 .WLLN